Modernizing Our Video Analytics Stack: From Rails to Node.js

We recently completed a significant migration of our Surfmeter Client Analytics backend from Ruby on Rails and Postgres to a modern Node.js-based architecture based on Express and BullMQ. Here’s how we approached this migration and what we learned along the way. We hope this blog post will be useful to others who are considering a similar migration!

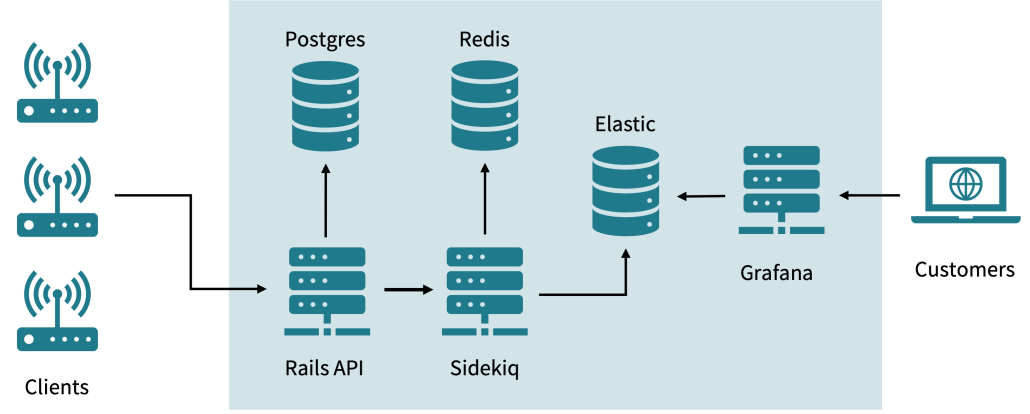

Our Existing Stack

Surfmeter is based on a client-server architecture, where our clients are Surfmeter-equipped devices communicating with a central server. Our original stack has served us well for years, handling video quality, web performance, and network-based measurements for major ISPs — primarily based on an active probing approach. Here, the use case consists of dozens or maybe hundreds of probes submitting their measurement data on a scheduled basis to our central server. We use the following stack:

- Web server: we ingest data from a Ruby on Rails web server — puma, to be precise — running in multiple processes

- Postgres: we store everything in a single-node SQL database. Our REST API is based on objects, and so we also save these records in a normalized database scheme. This allows us to semantically store and query the data (e.g., one video measurement has one video; one measurement has many statistics, etc.). This approach allows complex data analysis queries and, generally, creates lower data storage requirements.

- Job processing: Sidekiq, a popular Ruby gem for asynchronous job processing, is used to handle various data calculation workloads with multiple parallel workers. These include:

- Statistics processing, including video QoE calculations

- IP address resolution and anonymization via our own IP resolver implementation

- Geolocation lookups and reverse-geocoding

- Creation of flat data and indexing into Elasticsearch

- Redis: Sidekiq uses Redis for job management and caching (although we are considering moving over to Dragonfly)

- Elasticsearch: we use an ELK (Elasticsearch, Logstash and Kibana) stack for analytics and reporting, in conjunction with Grafana for visualization. The complex SQL-like data, enriched with statistics and geolocation, is stored here in a flat format for fast querying and aggregation-based statistics.

Our stack before the migration.

Data persistence is handled both by Postgres and Elasticsearch, but we occasionally clean up the database after everything’s been indexed into Elasticsearch. Our database holds more granular data, but we do not need to keep it for a long time.

You might ask: why Rails? It’s just what we are used to. When you build software in a startup, it is vital to not waste time on adopting the latest and greatest technology just for the sake of it — instead, you have to build on what you know best and what gets the job done. Rails still is, in our opinion, a great framework for building featureful web applications, where you can iterate quickly. And we’re still proud of our app, which is covered extensively by unit tests, and has never failed us so far.

Why We Migrated

Last year we introduced a new set of SDKs for client analytics — allowing content providers to directly integrate Surfmeter-based quality monitoring into their own Android, iOS, and web-native implementations. This is a different use case compared to the active probing scenario: now, several thousands of clients are equipped with our SDK to continuously send their measurement data to our server, and this type of data is largely unpredictable. It is sent more frequently, and more clients are active during peak times.

As our measurement volume grew and real-time processing requirements became more demanding, we needed an architecture that would:

- Handle high-throughput measurement ingestion more efficiently

- Process asynchronous workloads with better performance

- Scale horizontally with less overhead

- Be easy to maintain

As we deployed our existing Rails-based stack for a first customer, we noticed issues with high CPU load and latency for measurement processing. We found that there were two main bottlenecks in the current setup for this type of workload:

- Slow Rails web server, leading to low throughput and high latency for requests, many of them timing out after 15 seconds.

- Slow job processing, causing a buildup in jobs that could not be processed in time, resulting in indexing delays

As data volumes grew more and more — and with the help of profiling tools like Elastic APM — we found the main culprit to be the SQL database: during storing of the objects, we SELECT and INSERT a lot of data from various tables. These queries became slow over time, due to a high number of JOIN statements needed. Essentially, we found that the database was not able to handle the load, even when giving the server more CPU resources.

To provide short-term fixes, we carefully examined our options and tried to start with the most effective solutions first. It’s the Pareto principle at work, again! We did the following:

- We moved from Ruby 3.1 to Ruby 3.2 with jemalloc and YJIT enabled. These seemingly minor changes can create huge performance gains.

- We optimized the usage of web server and worker processes/threads for Puma and Sidekiq. This is often a source of confusion for developers, because just using more workers is not always the solution — instead it might create more overhead.

- We added indices where needed to optimize the database performance. Often overlooked, indices are a key part of any database performance tuning.

- We removed a legacy microservice for statistics calculation and replaced it by a command-line call. In general, we — like others before — believe that microservices are just another layer of indirection and confusion, and we’d rather work with a monolithic server as much as possible.

- We got rid of a persisted data model for statistics, instead calculating them on-the-fly and in-memory only. Before, each statistic value (e.g., average bitrate) was stored as a separate row in the database, requiring a costly select and insert statement; now we use Postgres JSONB columns to store all statistics in a single column tied to a measurement, all in one go.

But overall, the problems were not solved, and we reached a point of diminishing returns. We certainly wouldn’t be able to handle twice as many clients, let alone 10x more. So we went for something completely different!

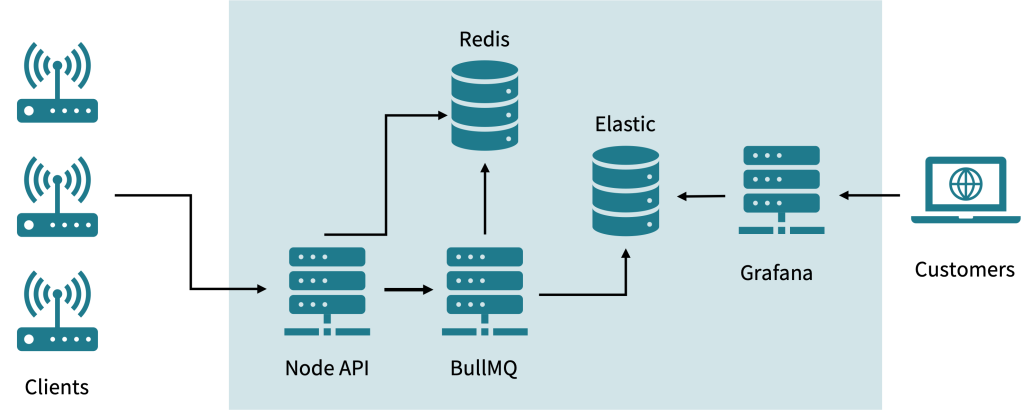

Our New Stack

We thought about how to best handle the high-throughput measurement ingestion and the complex data processing requirements. Based on research on what others in the field typically do, and talks with experts, we decided to go for a Node.js-based server with a Redis-first architecture. NoSQL, essentially. At the core, it was important to eliminate the SQL database. In fact, we were sure we wouldn’t even need it in the long run, because for client analytics-type data, persistence in the ELK cluster was sufficient. The raw data had to be persisted only temporarily while statistics were being calculated, and could then be evicted.

Our stack after the migration.

But first, the server: the reason for migrating to Node.js was that, primarily, our client libraries are written in TypeScript, and so we would benefit from a more consistent developer experience, where we could share types between the clients and server.

In general, in the last few years, we’ve found TypeScript to boost our productivity and code quality, compared to Ruby, which has very little type safety and lets you make silly mistakes all the time. This has become better with the Ruby LSP and tools like Sorbet, but it’s still not as good as TypeScript.

In general, Node.js is a faster engine for handling requests compared to a plain Ruby server. We settled for good old Express.js, which is a mature and battle-tested framework for building web servers. There might be faster servers — but we have to test them first. Libraries like Zod help with request validation, and we use a custom logger (built on top of Pino) to handle request and response logging in a consistent way.

Focusing on the client analytics use case only, we could also simplify the data model, because we could now store all data with a low footprint in Redis. Every measurement just became one hash entry in Redis; we would only need to persist the data in Elasticsearch for longer-term storage, and could then delete the data from Redis automatically via its expiration mechanism. Being able to reuse Redis would also mean very little maintenance overhead for us, as we already have a Redis cluster in place and configured. We would only have to swap out the web server component, and keep the remaining services running as-is (perhaps with some minor tuning in terms of resource consumption).

The SQL database could still be used for storing infrequently changing data like API keys. Since these could be cached in-memory, we wouldn’t need to query the database often.

Since Sidekiq does not work with Node.js, we had to find a replacement for the job processing. We found that BullMQ was a good fit for our needs. It is a TypeScript-based library that works with Redis as a backend. Porting our worker jobs over from Rails was quite straightforward, because we had 100% test coverage for the existing stack, and we knew what output we expected from the new workers. Furthermore, our video-quality related statistics code was available already in the form of native JavaScript code, so we could skip calling a microservice or command-line program, and instead execute each calculation directly, within less than 10 milliseconds, compared to the 200ms+ it took with Rails/Sidekiq (with all the network and database overhead).

LLMs and AI-assisted coding tools like Anthropic’s Claude and Cursor have helped us with migrating the core functionality, by referencing the existing code and using the type structure to guide the LLM in creating the right outputs. Right at the start of the migration, we set up a test framework to ensure we produced the same data as with our old stack — testing with vitest has been a breeze. In general, the whole developer experience was positive for us, with bugs found early due to the strong type system offered by TypeScript and BullMQ.

Results, and Where To Go From Here?

We wrote the whole new pipeline in less than 10 days! This included the server, the job processing, and the data model. We were able to migrate all our existing clients to the new system, and we’re now happily ingesting data and watching as the new workers process the measurements.

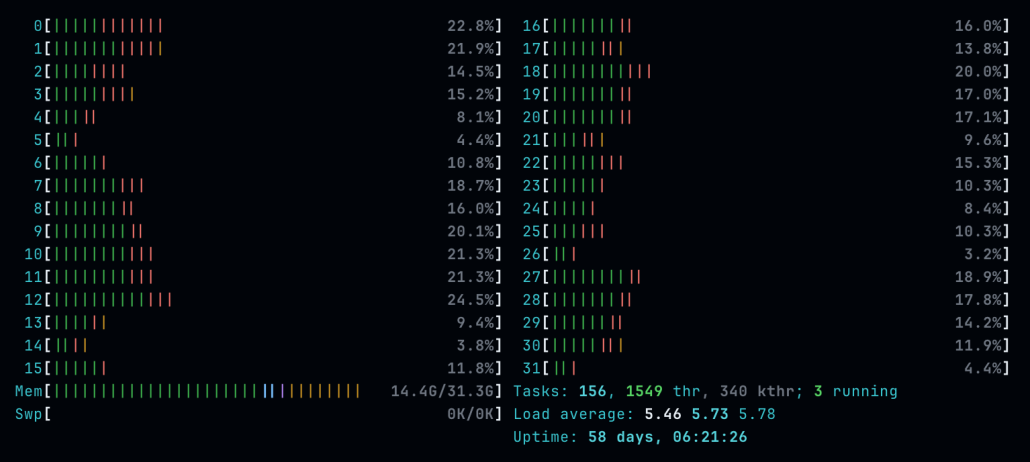

The CPU load has dropped significantly, from an almost unbearable 100% to a more manageable 5-10% on average. Memory consumption is also much lower, since most of it was held by Postgres. Our memory footprint was reduced by 75% or more. This means we can now easily scale to 10x the clients on the same hardware — and that is without any performance tuning.

This is more like what we want to see on a server.

Of course, our journey is not over yet. Here are some important paths forward:

- We will improve the operational monitoring of the stack and tune the number of workers, memory consumption, etc.

- We are now very positive about possibly migrating our other APIs over to the new architecture, eventually dropping Rails and the SQL database altogether.

- Another aspect is scalability: currently we scale horizontally by adding more compute to a single (physical or virtualized) server. This has its benefits, because we can easily offer on-premises deployments and keep the costs down. It’s also easier to understand a system as a whole when it is not distributed across multiple services within a Kubernetes cluster. (In fact, we often hear terrible stories about startup onboarding k8s too early in the process, and then having to deal with the complexity of managing a distributed system.) However, in the long run, we want to move to a more cloud-native architecture, where we can scale horizontally by just adding more servers as demand grows.

- Data pipelines built on top of Kafka would be a good addition, as they would allow us to handle the high-throughput ingestion of data from our clients in a more resilient way.

But these are topics for another blog post!

So, to summarize, this migration set us up for future growth and enables us to:

- Handle larger client/measurement volumes

- Add new video quality metrics and complex computations more easily

- Scale our infrastructure more cost-effectively

- Implement new features faster

The new Node.js-based architecture provides a solid foundation for our video quality measurement platform, allowing us to better serve our ISP and telco customers with more reliable and scalable analytics.