Cloud Gaming QoE measurements on 5G and DSL — how fast is good enough?

If you’ve followed tech news in recent years, you have probably noticed cloud gaming as one of the emerging trends. With platforms like GeForce Now (from NVIDIA), Stadia (from Google), or PlayStation Now (from Sony), you can access gaming content without having to own expensive gaming equipment. The game runs on the provider’s servers, which render and encode each frame in real time and stream the resulting video to your device, while your inputs are sent back to the server. All you have to do is play the game.

But how good is the quality of such solutions? That depends on the implementation of the app itself, but also on your network conditions. This is why we at AVEQ developed a solution to measure the quality of cloud gaming. We did so together with an ISP and a cloud gaming operator, to fully leverage the knowledge and expertise of all involved parties. Here we describe our solution and the test setup, and we show how well 5G and DSL performed for such services, including what ranges of latency to expect.

What Factors Impact Cloud Gaming QoE?

In a previous post, we gave a general overview of cloud gaming and what affects the quality that end users get. In short, the encoding settings on the server side, the network conditions during transmission, and the type of game being played all impact what the customer experiences. However, especially for novel application areas like cloud gaming, the challenge lies in being able to measure the parameters that matter for customer satisfaction in the first place.

Cloud gaming operators generally advise that limited bandwidth (i.e., anything below 10 MBit/s) can lead to suboptimal performance or low resolutions (below 720p), which customers notice as a blurry video stream. For 4K at 60 fps, bandwidths exceeding 40 MBit/s were sometimes recommended. This is not yet feasible on all types of networks and in all situations. What’s more, high latency (i.e., a high ping) can have a much bigger impact on how the game feels: if the latency is generally high or varies too much, the game can become unplayable.

How can we quantify the quality of cloud gaming? In classic video-on-demand streaming, the industry generally knows what conditions to expect from customers’ Internet connections, and adaptive streaming technologies have helped reduce buffering to a minimum. From our own measurements, we know that users in Germany typically experience only very short buffering, if any. Streaming analytics solutions are widely available, and most of them deliver the basic KPIs that you need. But not for cloud gaming!
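To make the bandwidth guidance quoted above concrete, here is a minimal Python sketch that maps a measured downstream bandwidth to the quality one could roughly expect. The thresholds (10 and 40 MBit/s) come from the operator advice mentioned earlier; the function name and tier labels are our own hypothetical choices. Note that bandwidth alone says nothing about latency, which often matters more.

```python
def expected_tier(bandwidth_mbit_s: float) -> str:
    """Coarse expectation of stream quality for a given downstream bandwidth.

    Thresholds follow the operator guidance quoted in the text (below 10 Mbit/s
    risks sub-720p video; above 40 Mbit/s was sometimes recommended for 4K at
    60 fps). The tier labels themselves are hypothetical.
    """
    if bandwidth_mbit_s < 0:
        raise ValueError("bandwidth must be non-negative")
    if bandwidth_mbit_s < 10:
        return "degraded: resolution below 720p likely"
    if bandwidth_mbit_s <= 40:
        return "720p or better plausible, 4K at 60 fps unlikely"
    return "4K at 60 fps possible"


for mbit in (8, 25, 50):
    print(mbit, "MBit/s ->", expected_tier(mbit))
```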

Gaming Measurements — tailor-made by AVEQ

To find out what’s important, we set out to measure the quality of cloud gaming, in particular of a VR gaming solution from a proprietary vendor.

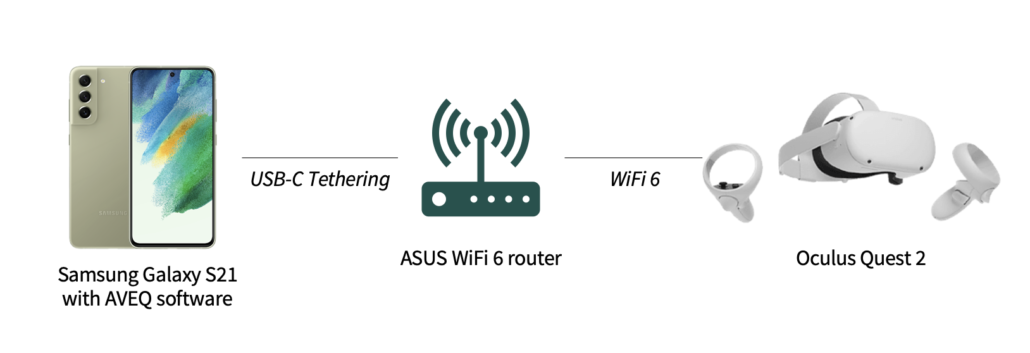

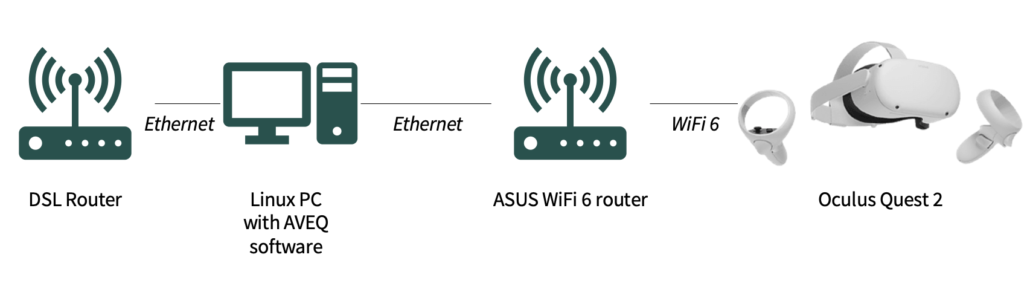

The games could be played on an Oculus Quest 2, connected either to a Samsung Galaxy S21 mobile phone for the 5G connection or to a regular Linux-based PC acting as a bridge to a DSL router. Because the VR gaming app ran on the Oculus device itself, it could use whichever network connection the phone or the PC offered. We wanted to know how good the performance and quality of the gaming itself was, as mediated by the network connection.

As explained in our previous blog article, at AVEQ we developed a custom C++-based application for network monitoring of streaming quality, tuned for specific gaming services. The basic idea was this:

- Passively measure the traffic passing through the device

- Extract the parameters that are needed to estimate the overall gaming quality

- Run a QoE model on those parameters to get a high-level view of the quality
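The three steps can be sketched as a small pipeline. This is a simplified Python illustration, not our C++ implementation: `PacketRecord`, `bitrate_per_window`, and `run_pipeline` are hypothetical names, step 1 (the passive capture itself) is assumed to have already produced per-packet timestamps and sizes, and the QoE model is passed in as a plain function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class PacketRecord:
    """One passively captured packet (step 1 is assumed to produce these)."""
    timestamp_s: float  # capture time in seconds
    size_bytes: int     # packet size on the wire


def bitrate_per_window(packets: Iterable[PacketRecord],
                       window_s: float = 1.0) -> List[float]:
    """Step 2: aggregate packet sizes into a bitrate series in Mbit/s."""
    totals: Dict[int, int] = {}
    for p in packets:
        idx = int(p.timestamp_s // window_s)
        totals[idx] = totals.get(idx, 0) + p.size_bytes
    if not totals:
        return []
    # Windows without packets count as 0 Mbit/s instead of being skipped.
    return [totals.get(i, 0) * 8 / window_s / 1e6
            for i in range(min(totals), max(totals) + 1)]


def run_pipeline(packets: Iterable[PacketRecord],
                 model: Callable[[List[float]], float],
                 window_s: float = 1.0) -> float:
    """Steps 2 and 3: extract the KPI series, then apply a QoE model to it."""
    return model(bitrate_per_window(packets, window_s))


# Usage with a placeholder "model" (the mean bitrate), NOT a real QoE model:
packets = [PacketRecord(0.1, 125_000), PacketRecord(0.5, 125_000),
           PacketRecord(2.2, 125_000)]
print(bitrate_per_window(packets))  # [2.0, 0.0, 1.0]
print(run_pipeline(packets, lambda s: sum(s) / len(s)))  # 1.0
```

Keeping the model pluggable means the extraction step does not need to change when the model does, e.g. when an extended gaming model becomes available.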

Our application works primarily on Android-based mobile devices, since this lets us measure the quality on the device that receives the streams, e.g., via 5G (here, the Samsung S21). However, thanks to the application’s modular, cross-platform design, it can also run on Linux-based PCs acting as bridges, which is what we did for the DSL-based tests.

The tests were set up by the ISP in their laboratory environment. A dedicated 5G Standalone Radio (SA) testbed was created for the mobile tests, but tests could also be conducted on the regular public 5G network.

The DSL-based tests used a regular DSL router, as found in customers’ homes. The Linux PC acted as a bridge, through which we could also perform traffic shaping, for instance to reduce the available bandwidth or to increase the latency and jitter of transmitted packets.
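One common way to do such traffic shaping on a Linux bridge is the kernel’s netem queueing discipline, configured via `tc`. As a sketch, assuming netem (the tool is our assumption here, not necessarily the one used in the tests), the following Python function builds the corresponding command; `netem_command` and the interface name `eth1` are hypothetical.

```python
import shlex
from typing import List, Optional


def netem_command(iface: str, delay_ms: int = 0, jitter_ms: int = 0,
                  rate_mbit: Optional[int] = None) -> List[str]:
    """Build a `tc` command that installs netem as the root qdisc of `iface`.

    netem then delays outgoing packets by delay_ms +/- jitter_ms and caps the
    egress rate at rate_mbit. Only traffic leaving `iface` is affected.
    """
    cmd = ["tc", "qdisc", "replace", "dev", iface, "root", "netem"]
    if delay_ms or jitter_ms:
        cmd += ["delay", f"{delay_ms}ms"]
        if jitter_ms:
            cmd.append(f"{jitter_ms}ms")
    if rate_mbit is not None:
        cmd += ["rate", f"{rate_mbit}mbit"]
    return cmd


# Example: 100 ms delay with 20 ms jitter and a 10 Mbit/s cap on a
# hypothetical bridge port "eth1".
print(shlex.join(netem_command("eth1", delay_ms=100, jitter_ms=20, rate_mbit=10)))
# tc qdisc replace dev eth1 root netem delay 100ms 20ms rate 10mbit
```

Because a root qdisc only shapes egress traffic, you would apply it to both bridged interfaces to affect both directions. Running the command requires root privileges (e.g., via `subprocess.run(cmd, check=True)`), and `tc qdisc del dev eth1 root` removes the shaping again.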

For mobile measurements, we’re also lucky to be able to rely on excellent open-source projects like PCAPdroid; check it out if you want to record traffic on Android devices. The software is extremely feature-rich and well maintained, and we were glad to have its lead developer, Emanuele Faranda, support us with some special issues.

How can you get all the QoE-relevant information?

We quickly noticed that not all the information we needed was available from the transmitted network streams — either because of encryption or otherwise undecipherable message exchanges. Together with the team developing the VR game application under study, we therefore implemented a new protocol for exchanging quality-relevant KPIs over the network, to be read by third-party applications wanting to measure and improve the underlying network performance.

Our new protocol for gaming-related KPIs is named GQOE, short for Gaming Quality of Experience. We presented an initial version of the protocol at one of the recent meetings of the Video Quality Experts Group (VQEG) and received good feedback from the experts attending the session. A draft specification is available online.

The following figure shows a simplified overview of how it works. Gaming traffic is exchanged between the phone and the gaming server over the public Internet. The GQOE messages are transmitted out of band, alongside the game traffic, and can be parsed by monitoring probes along the way. In our software, we implemented a GQOE parser in addition to the parsers we already had for other types of streams.
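To illustrate what such a parser does, here is a Python sketch. Note that the message layout below (JSON with `type`, `ts`, and a `kpis` object) is entirely hypothetical and is not the GQOE wire format, which is defined in the draft specification. The point is only the structure: recognize GQOE messages among other payloads, extract the KPIs, and ignore anything else, such as encrypted game traffic.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class GqoeSample:
    timestamp_ms: int
    bitrate_kbps: float
    framerate_fps: float
    latency_ms: float


def parse_gqoe(payload: bytes) -> Optional[GqoeSample]:
    """Parse one message in a HYPOTHETICAL JSON layout; None for anything else."""
    try:
        msg = json.loads(payload.decode("utf-8"))
    except ValueError:  # covers UnicodeDecodeError and json.JSONDecodeError
        return None  # not a GQOE message, e.g. encrypted game traffic
    if not isinstance(msg, dict) or msg.get("type") != "gqoe":
        return None
    try:
        kpis = msg["kpis"]
        return GqoeSample(
            timestamp_ms=int(msg["ts"]),
            bitrate_kbps=float(kpis["bitrate_kbps"]),
            framerate_fps=float(kpis["fps"]),
            latency_ms=float(kpis["latency_ms"]),
        )
    except (KeyError, TypeError, ValueError):
        return None  # malformed message: missing or non-numeric fields


sample = b'{"type": "gqoe", "ts": 1000, "kpis": {"bitrate_kbps": 20000, "fps": 60, "latency_ms": 120}}'
print(parse_gqoe(sample))
print(parse_gqoe(b"\x17\x03\x03\x00\x20"))  # None: not a GQOE message
```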

Based on the GQOE messages, we could calculate the quality of a gaming session using the ITU-T Rec. G.1072 QoE model for gaming. We addressed this in our previous post already: while the model is not an optimal fit for these types of applications, it still gives a good first indication of the user experience. The model was developed for cloud gaming, but its scope was too narrow for our use case, and it does not yet support VR gaming. Hence, the MOS ratings we obtained from the model have to be taken with a grain of salt. The model certainly has to be extended, something we know ITU-T is currently addressing in dedicated work items.

Test Results

Here is an overview of some results we obtained with the software. These results are meant to illustrate what we can measure with our software; we don’t want to conclude that VR cloud gaming generally works better or worse with one technology than with another.

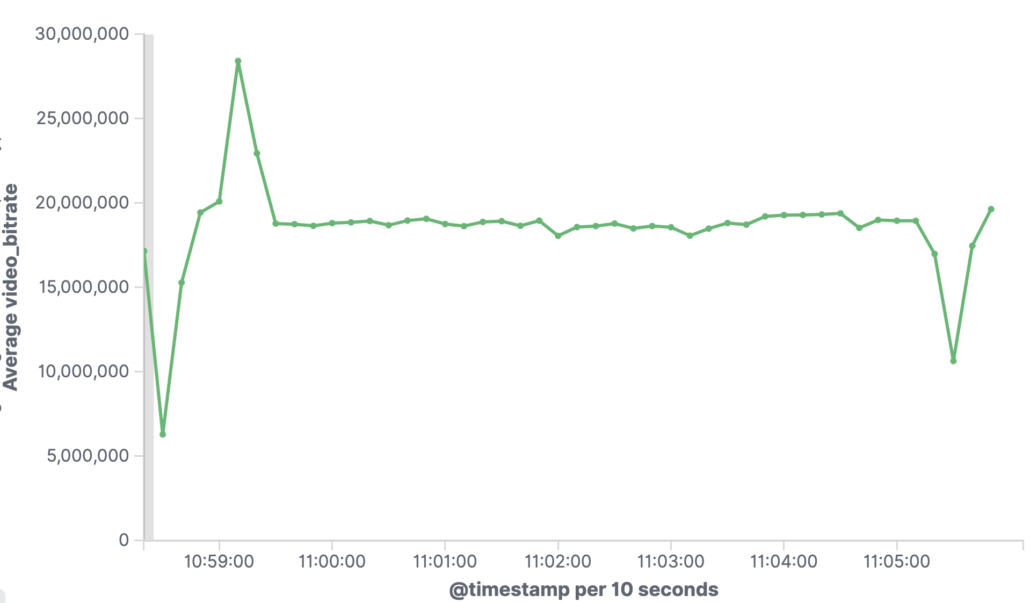

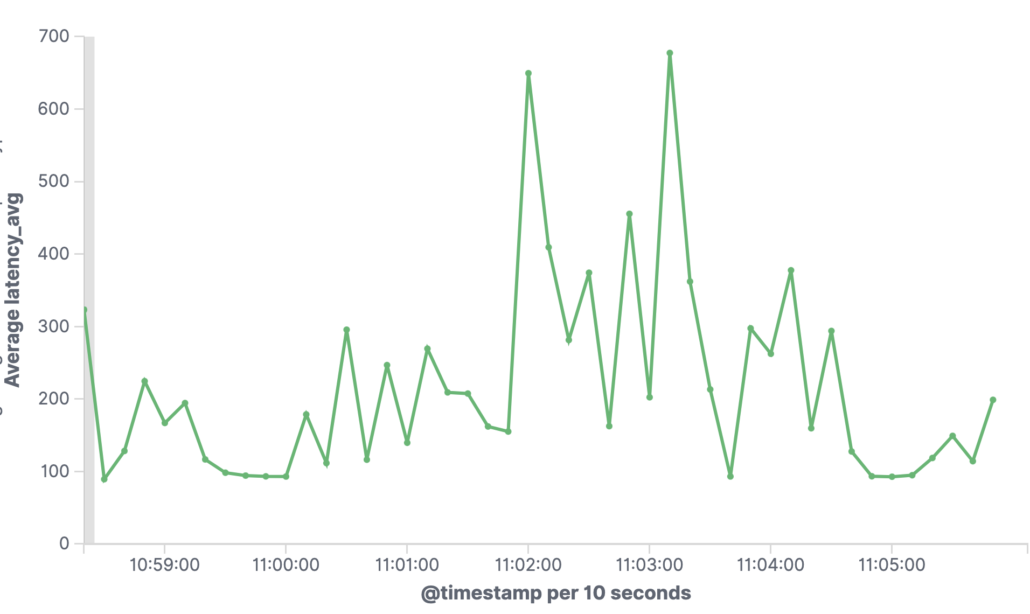

First, we look at the DSL-based results from a 50 MBit/s VDSL connection. The next four figures show the following three KPIs and one QoE value:

- Bitrate

- Latency

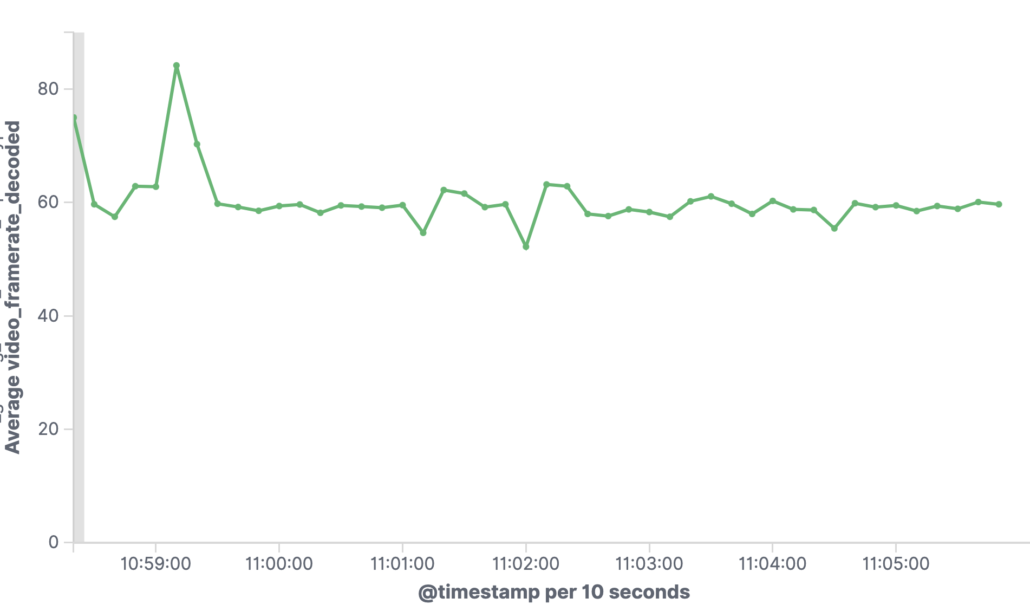

- Framerate

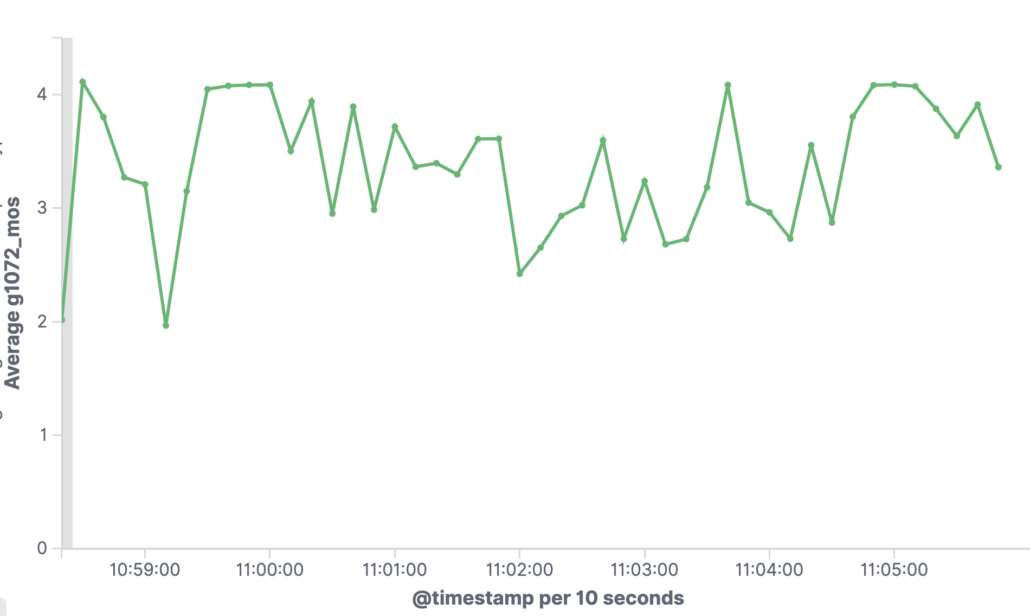

- MOS based on ITU-T Rec. G.1072

As reported via the GQOE messages, the KPIs vary over the course of several minutes of gameplay.

Bitrate stays stable at around 20 MBit/s after an initial period of fluctuation. Towards the end of the roughly 5-minute session, the bitrate drops, and a lower video resolution was played out during that period.
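A drop like the one towards the end of the session can be flagged automatically. The following Python sketch assumes a per-second bitrate series and marks stretches that stay below a fraction of the session median for a few consecutive samples; the 70 % ratio, the three-sample minimum, and the function name are illustrative assumptions, not values from our tests. Using the median rather than the mean keeps the initial fluctuation from skewing the reference.

```python
from statistics import median
from typing import List, Optional, Tuple


def find_bitrate_drops(bitrate_mbit_s: List[float], ratio: float = 0.7,
                       min_len: int = 3) -> List[Tuple[int, int]]:
    """Return (start, end) index ranges (end exclusive) where the bitrate stays
    below ratio * session median for at least min_len consecutive samples."""
    if not bitrate_mbit_s:
        return []
    threshold = ratio * median(bitrate_mbit_s)
    drops: List[Tuple[int, int]] = []
    start: Optional[int] = None  # index where the current low stretch began
    for i, value in enumerate(bitrate_mbit_s):
        if value < threshold and start is None:
            start = i
        elif value >= threshold and start is not None:
            if i - start >= min_len:
                drops.append((start, i))
            start = None
    # A drop that lasts until the end of the session is still reported.
    if start is not None and len(bitrate_mbit_s) - start >= min_len:
        drops.append((start, len(bitrate_mbit_s)))
    return drops


series = [20.0] * 10 + [12.0] * 4 + [20.0]
print(find_bitrate_drops(series))  # [(10, 14)]
```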

The latency varies because of the particular video streaming technology chosen: it prioritizes frame integrity over latency, which leads to rather low variability in framerate but higher variation in latency. In other words, high latency does not lead to frame loss. Latency spikes reach around 600–700 ms, which makes the game very hard to play during these moments. During other periods, latency hovers around 100–300 ms.
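Latency spikes like these can be extracted from the GQOE time series by grouping consecutive samples above a threshold into episodes. In the following Python sketch, the 500 ms threshold, the `(time_s, latency_ms)` sample layout, and the function name are assumptions for illustration.

```python
from typing import List, Optional, Tuple


def latency_spikes(samples: List[Tuple[float, float]],
                   threshold_ms: float = 500.0) -> List[Tuple[float, float, float]]:
    """Group consecutive (time_s, latency_ms) samples above threshold_ms into
    episodes and return (start_s, end_s, peak_ms) for each episode."""
    episodes: List[Tuple[float, float, float]] = []
    current: Optional[List[float]] = None  # [start_s, end_s, peak_ms] of open episode
    for t, lat in samples:
        if lat > threshold_ms:
            if current is None:
                current = [t, t, lat]
            else:
                current[1] = t
                current[2] = max(current[2], lat)
        elif current is not None:
            episodes.append((current[0], current[1], current[2]))
            current = None
    if current is not None:  # episode still open at the end of the session
        episodes.append((current[0], current[1], current[2]))
    return episodes


samples = [(0, 150), (1, 200), (2, 650), (3, 700), (4, 180), (5, 620), (6, 250)]
print(latency_spikes(samples))
```

For these sample values, taken one second apart, the function yields two episodes: seconds 2 to 3 (peak 700 ms) and second 5 (peak 620 ms).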

As explained above, framerate stays mostly constant at around 60 fps.

The variation in latency (and, to some extent, in framerate) clearly impacts the MOS, which drops below 2.5 on a scale from 1 to 5 during some periods. Keeping in mind that the G.1072 model is not perfectly suited to the type of application we measured, we can still see how this general indicator can be used to track the overall quality of the game by integrating multiple KPIs into one number.
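To turn a MOS time series into a few numbers that are easy to track, one could summarize it as sketched below in Python. The 2.5 threshold mirrors the value mentioned above; the function name and the assumption of equally spaced samples are ours.

```python
from statistics import fmean
from typing import Dict, Sequence


def summarize_mos(mos: Sequence[float], threshold: float = 2.5) -> Dict[str, float]:
    """Summarize equally spaced MOS samples (scale 1 to 5) of one session."""
    if not mos:
        raise ValueError("empty MOS series")
    below = sum(1 for m in mos if m < threshold)
    return {
        "mean": fmean(mos),
        "min": min(mos),
        # With equally spaced samples, this is the share of session time
        # spent below the threshold.
        "share_below": below / len(mos),
    }


print(summarize_mos([4.0, 3.8, 2.2, 2.4, 3.9]))
```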

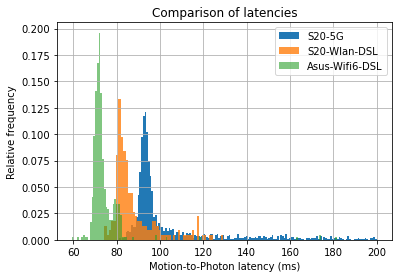

The following figure shows the distribution of motion-to-photon latency values for different network types, all using the same phone and the same VR cloud gaming application. Here, we used a public 5G network, playing directly from an office room. The 5G network performs worst, with average latencies of almost 100 ms. Wi-Fi and Wi-Fi 6 via the DSL connection clearly outperform the mobile network in terms of latency.
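A distribution like this can be condensed into a few statistics per network type. The following Python sketch computes mean, median, and 95th percentile per network; the input layout and function name are hypothetical, and the example numbers are made up for illustration, not measured data from our tests.

```python
from statistics import mean, median, quantiles
from typing import Dict, List


def latency_summary(by_network: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Mean, median and 95th percentile of latency samples (ms) per network."""
    summary: Dict[str, Dict[str, float]] = {}
    for network, values in by_network.items():
        if len(values) < 2:
            raise ValueError(f"need at least two samples for {network}")
        # The 'inclusive' method keeps percentiles within the observed range,
        # which matters for small samples. Index 94 is the 95th cut point.
        p95 = quantiles(values, n=100, method="inclusive")[94]
        summary[network] = {"mean": mean(values), "median": median(values), "p95": p95}
    return summary


# Made-up example values, only to show the call:
data = {"5G": [90.0, 95.0, 98.0, 100.0], "Wi-Fi 6": [40.0, 42.0, 43.0, 45.0]}
for name, stats in sorted(latency_summary(data).items(), key=lambda kv: kv[1]["mean"]):
    print(name, stats)
```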

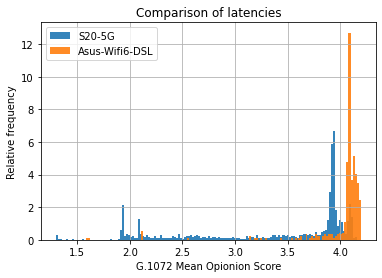

The next figure shows the MOS values calculated according to ITU-T Rec. G.1072. Here again, the performance difference between fixed-line and 5G is easy to see.

What we saw was that the regular public 5G network did not offer the low latency and high bandwidth that we would like to see for new applications like gaming. But of course, as mentioned above, this was just a test of what we could measure with AVEQ’s GQOE implementation. Further detailed tests with a dedicated 5G slice just for the gaming traffic and 5G Standalone Radio are ongoing and will show how well new network technologies can improve users’ experiences.

What’s next?

Do you operate a service that uses video and is critically dependent on low latencies, high throughput, or a combination of both? Are you already running a mobile 5G network and planning to measure how well it performs? At AVEQ we don’t just have ready-to-go solutions for measuring streaming video quality, but also the expertise needed to develop custom applications for specific use cases, as we showed in this article.

In the end, the user’s satisfaction is what matters, and you can only quantify it with the right tools at your disposal. Getting a final quality score from QoE models can tremendously help in operationalizing your new service and reacting immediately to service issues. We’re in constant exchange with standards bodies and forums like ITU-T and VQEG to bring you the latest in automated QoE measurement.

Don’t hesitate to get in touch with us if you want to find out more — we’d like to hear from you!