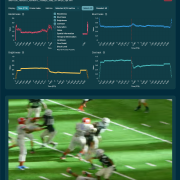

VideoAnalyzer — AVEQ’s High-Performance C++ Toolkit for No-Reference Video Metrics

At AVEQ, a significant part of our work involves the deep analysis of video streams to understand and quantify quality. While our flagship product Surfmeter uses a client-side approach to measure delivery QoE (i.e., how well does the network support streaming?), we also needed a solution to understand video quality at the source. Think, for example, of ingesting video streams that might be affected by blockiness or frozen frames, or of verifying encoder output. To address this, we built the AVEQ VideoAnalyzer, and in this post we’re going to give you a sneak preview of it.

Our Goals

Video material isn’t always perfect. Our client-side delivery QoE measurements, however, assume that OTT services like YouTube or Netflix know how to encode their videos. When we work with customers from the broadcasting industry, the focus shifts to earlier stages in the delivery pipeline. Ingested video from satellite feeds or user-generated content may have quality issues that then propagate to the encoder and, finally, to the end users. We want to detect and prevent that.

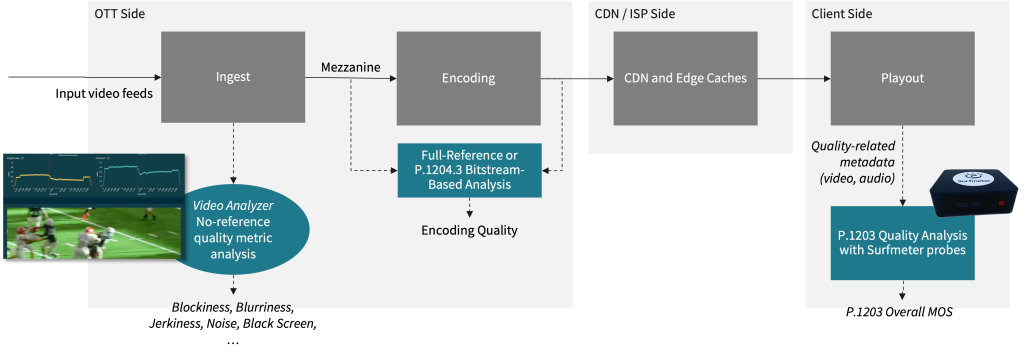

In the following graphic you can see a simplified end-to-end streaming pipeline, and where different types of quality analyses can be performed. Let’s focus on the most well known one: full-reference quality analysis during/after encoding. Here, we compare the original input against the encoded rendition, usually via metrics like PSNR, SSIM, or VMAF. We could also use P.1204.3, which has been shown to outperform VMAF even without a reference (e.g., in this report). Our Surfmeter probes measure the delivery QoE at the very end of the transmission chain — and towards the left, you will find the new use case for a no-reference QoE analysis.

A simplified E2E video delivery pipeline.

Our primary motivation therefore was to create a robust, no-reference video analysis toolkit. To be clear: “no-reference” means that the video quality analysis is done without referencing a “known good” video, instead relying only on the intrinsic properties of the sequence you have at hand. We explained more about the different model types in our previous blog article.

No-reference quality analysis is not an easy feat, as there are many aspects that can impact final video quality. Just think of the difference between a clip that is blurry because of its low resolution and one that is blurry because of an out-of-focus image. The former is a technical encoding aspect; the latter is related to the camera operator’s poor focus, or perhaps even to artistic intent. Evaluating actual QoE in a no-reference domain is still a largely unsolved problem. Recent results from the ICME Grand Challenge on NR-based evaluation show that there are promising new developments in this space using deep learning (e.g., with Large Vision Models). Still, explainability suffers when you do not have access to fundamental metrics that engineers can also relate to.

From the outset, it was clear that our tool had to be capable of running quick analyses on essential metrics from the command line while also being embeddable as a library into our broader Surfmeter ecosystem in the future. We needed to perform various tasks that existing tools couldn’t handle efficiently:

- Rapid analysis: Quickly analyze video files to identify potential issues like compression artifacts, freezes, or black screens without needing the original source video for comparison.

- Research and development: Provide a platform that can be easily extended with new metrics and that allows rapid iteration on existing metrics.

- Automation: The tool needed to be a command-line utility that could be easily scripted and integrated into automated testing pipelines, outputting structured data (JSON) for easy parsing. This would also help during development and validation of new quality models and algorithms.

- Integration: Beyond the CLI, we needed a C++ library that could be directly integrated into other desktop and mobile applications, offering high performance and low overhead.

Essentially, we aimed to build a foundational component that serves both as an ad-hoc analysis tool and as a building block for future R&D and products.

What’s in the Box — Available Metrics

We strongly believe in structured output data and machine-enabled processing. VideoAnalyzer therefore doesn’t have a complicated configuration. It just produces a comprehensive set of per-frame metrics that are output as JSON. Here is a complete list of the currently implemented metrics:

Blockiness

These features measure blocking artifacts. Such artifacts are usually side effects of bitrates that are too low, combined with the DCT-based compression used in block-based encoders.

blockiness: Measures blocking artifacts (0-1).

blockiness_vp9: A variant of the blockiness metric inspired by the libvpx-vp9 encoder.
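To illustrate the general idea behind such a feature, here is a minimal sketch of one common heuristic: compare luminance jumps at block boundaries with jumps inside the blocks. This is not the algorithm used in VideoAnalyzer. The function name `blockiness_sketch`, the fixed 8-pixel grid, the horizontal-only analysis, and the mapping to the 0-1 range are all assumptions made for the example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper, not the VideoAnalyzer API. Input is a row-major 8-bit
// grayscale frame. Returns 0 when block boundaries are no stronger than the
// rest of the image and approaches 1 when all horizontal luminance jumps sit
// exactly on the assumed block grid.
double blockiness_sketch(const std::vector<std::uint8_t>& luma,
                         std::size_t width, std::size_t height,
                         std::size_t block = 8) {
    assert(luma.size() == width * height && block > 1);
    double boundary_sum = 0.0, interior_sum = 0.0;
    std::size_t boundary_n = 0, interior_n = 0;

    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 1; x < width; ++x) {
            // Absolute difference between horizontally adjacent pixels.
            const double diff = std::abs(
                static_cast<double>(luma[y * width + x]) -
                static_cast<double>(luma[y * width + x - 1]));
            if (x % block == 0) {
                boundary_sum += diff;  // jump across a block boundary
                ++boundary_n;
            } else {
                interior_sum += diff;  // jump inside a block
                ++interior_n;
            }
        }
    }
    if (boundary_n == 0 || interior_n == 0) return 0.0;

    const double b = boundary_sum / static_cast<double>(boundary_n);
    const double i = interior_sum / static_cast<double>(interior_n);
    if (b + i == 0.0 || b <= i) return 0.0;  // flat frame or no boundary excess
    return (b - i) / (b + i);
}
```

A production metric would also have to handle vertical boundaries, variable block sizes, and scaled content where the block grid no longer aligns with the pixel grid.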

Blurriness

Blurriness can be caused by upscaling footage or by intrinsic blur from the camera used to capture the content. Footage can also contain intentional artistic effects that include blur.

blurriness: Measures perceptual blurriness (0-1).
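A widely used sharpness heuristic, shown here only as an illustration, is the variance of the Laplacian: frames with few sharp edges produce a low variance. The sketch below is not VideoAnalyzer's blurriness metric. The name `laplacian_variance` is hypothetical, and the raw value is not mapped to the 0-1 range mentioned above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative only: variance of a 4-neighbour Laplacian over a row-major
// 8-bit grayscale frame. Low values suggest few sharp edges (a blurrier frame).
double laplacian_variance(const std::vector<std::uint8_t>& luma,
                          std::size_t width, std::size_t height) {
    assert(luma.size() == width * height);
    if (width < 3 || height < 3) return 0.0;  // no interior pixels to filter

    // Pixel accessor returning a double so the arithmetic cannot wrap around.
    auto at = [&](std::size_t x, std::size_t y) {
        return static_cast<double>(luma[y * width + x]);
    };

    double sum = 0.0, sum_sq = 0.0;
    std::size_t n = 0;
    for (std::size_t y = 1; y + 1 < height; ++y) {
        for (std::size_t x = 1; x + 1 < width; ++x) {
            const double lap = at(x - 1, y) + at(x + 1, y) +
                               at(x, y - 1) + at(x, y + 1) - 4.0 * at(x, y);
            sum += lap;
            sum_sq += lap * lap;
            ++n;
        }
    }
    const double mean = sum / static_cast<double>(n);
    return sum_sq / static_cast<double>(n) - mean * mean;
}
```

Note that such a measure cannot tell whether blur comes from upscaling, poor focus, or artistic intent, which is exactly the ambiguity described above.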

Luminance

These features relate to everything regarding the light/dark appearance of the content.

brightness: The average grayscale value of the frame (0-1).

black_screen: A boolean flag that is true if the frame’s brightness is below a certain threshold. Black screen detection is vital for ensuring that ingested content feeds are showing actual content.

white_level / black_level: Detects irregular white and black levels, often indicating auto-enhancement issues from cameras (0-1, higher is worse).
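The brightness and black-screen features are simple enough to sketch in a few lines. The following is a minimal example, assuming an 8-bit grayscale frame stored as a flat vector. The function names and the default threshold of 0.1 are assumptions for illustration and not the values used by VideoAnalyzer.

```cpp
#include <cassert>
#include <cstdint>
#include <numeric>
#include <vector>

// Average grayscale value of the frame, normalized to the range 0-1.
double mean_brightness(const std::vector<std::uint8_t>& luma) {
    if (luma.empty()) return 0.0;
    const double sum = std::accumulate(luma.begin(), luma.end(), 0.0);
    return sum / (255.0 * static_cast<double>(luma.size()));
}

// Flags a frame as black when its mean brightness is below the threshold.
// The default of 0.1 is an assumed value chosen for this example.
bool is_black_screen(const std::vector<std::uint8_t>& luma,
                     double threshold = 0.1) {
    assert(threshold >= 0.0 && threshold <= 1.0);
    return mean_brightness(luma) < threshold;
}
```

When picking a threshold, keep in mind that limited-range 8-bit video encodes black as luma value 16 rather than 0, so a black frame can have a normalized brightness of about 0.06.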

Color

The color-related features check basic color properties. By measuring these metrics for known video assets you can spot degradations in your pipeline.

saturation: Measures the average saturation from the HSV color space (0-1).

contrast: The ratio of the 99th and 1st percentile luminance values (0-1).

contrast_michelson: The Michelson contrast, another method for measuring contrast (0-1).
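Two of these features have well-known textbook definitions. HSV saturation of a pixel is (max - min) / max over its R, G, and B values, and Michelson contrast is (Lmax - Lmin) / (Lmax + Lmin). The sketch below applies both to raw 8-bit buffers. The function names and the buffer layout (interleaved RGB, flat grayscale) are assumptions for the example, and VideoAnalyzer may compute these values differently, for instance on percentiles rather than on the absolute minimum and maximum.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Mean HSV saturation (0-1) of an interleaved 8-bit RGB buffer.
double mean_saturation(const std::vector<std::uint8_t>& rgb) {
    assert(rgb.size() % 3 == 0);
    if (rgb.empty()) return 0.0;
    double sum = 0.0;
    for (std::size_t i = 0; i < rgb.size(); i += 3) {
        const int r = rgb[i], g = rgb[i + 1], b = rgb[i + 2];
        const int mx = std::max({r, g, b});
        const int mn = std::min({r, g, b});
        // Saturation is defined as 0 for pure black (mx == 0).
        if (mx > 0) sum += static_cast<double>(mx - mn) / mx;
    }
    return sum / static_cast<double>(rgb.size() / 3);
}

// Michelson contrast (0-1) of an 8-bit grayscale frame.
double michelson_contrast(const std::vector<std::uint8_t>& luma) {
    if (luma.empty()) return 0.0;
    const auto mm = std::minmax_element(luma.begin(), luma.end());
    const double lo = *mm.first;
    const double hi = *mm.second;
    if (lo + hi == 0.0) return 0.0;  // all-black frame: avoid division by zero
    return (hi - lo) / (hi + lo);
}
```

Because the absolute minimum and maximum are sensitive to single outlier pixels, a percentile-based variant is often more stable in practice.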

Spatial Detail

Here, we want to find out how much spatial detail is contained in the video. This, combined with temporal changes, tells you about the complexity of the content.

spatial_information: A measure of spatial complexity based on the ITU-T P.910 recommendation.

fine_detail: Indicates the presence of high-detail textures (0-1, higher is worse).

sum_of_high_frequencies: A metric based on the frame’s radial profile, indicating detail.

noise: An experimental metric to detect video noise.
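For reference, ITU-T P.910 defines spatial information as the standard deviation over all pixels of the Sobel-filtered luminance frame. The following per-frame sketch follows that definition. The function name is hypothetical, border pixels are simply skipped, and VideoAnalyzer's actual implementation may differ in details such as border handling or bit depth.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Per-frame spatial information in the sense of ITU-T P.910: the standard
// deviation of the Sobel gradient magnitude over a row-major 8-bit luma frame.
double spatial_information(const std::vector<std::uint8_t>& luma,
                           std::size_t width, std::size_t height) {
    assert(luma.size() == width * height);
    if (width < 3 || height < 3) return 0.0;  // Sobel needs a 3x3 neighbourhood

    auto at = [&](std::size_t x, std::size_t y) {
        return static_cast<double>(luma[y * width + x]);
    };

    double sum = 0.0, sum_sq = 0.0;
    std::size_t n = 0;
    for (std::size_t y = 1; y + 1 < height; ++y) {
        for (std::size_t x = 1; x + 1 < width; ++x) {
            // Horizontal and vertical Sobel responses.
            const double gx =
                (at(x + 1, y - 1) + 2.0 * at(x + 1, y) + at(x + 1, y + 1)) -
                (at(x - 1, y - 1) + 2.0 * at(x - 1, y) + at(x - 1, y + 1));
            const double gy =
                (at(x - 1, y + 1) + 2.0 * at(x, y + 1) + at(x + 1, y + 1)) -
                (at(x - 1, y - 1) + 2.0 * at(x, y - 1) + at(x + 1, y - 1));
            const double mag = std::hypot(gx, gy);
            sum += mag;
            sum_sq += mag * mag;
            ++n;
        }
    }
    const double mean = sum / static_cast<double>(n);
    // Clamp tiny negative values caused by floating-point rounding.
    const double variance =
        std::max(0.0, sum_sq / static_cast<double>(n) - mean * mean);
    return std::sqrt(variance);
}
```

For a whole sequence, P.910 takes the maximum of this per-frame value over time.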

Temporal Change

Temporal changes include overall motion and scene changes, i.e., activity in the video that may cause it to require higher bitrates during encoding.

temporal_information: A measure of temporal complexity (i.e., amount of motion) based on ITU-T P.910.

freezing: A boolean flag that is true if the frame is identical or nearly identical to the previous one.

scene_change: A boolean flag indicating a likely scene cut.

scene_score: The raw score used to detect a scene change.

sad_normalized: The Sum of Absolute Differences to the previous frame, normalized by pixel count (0-1).

jerkiness: Measures repeated frames within a time window to detect judder (0-1).
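Several of these features boil down to comparing a frame with its predecessor. The sketch below shows three of them under simple assumptions: temporal information as the standard deviation of the pixel-wise frame difference (following ITU-T P.910), a SAD value normalized by pixel count and by 255 to land in the 0-1 range, and a freeze flag derived from that SAD. The function names, the extra division by 255, and the freeze threshold of 0.001 are assumptions for the example and not taken from VideoAnalyzer.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

using Frame = std::vector<std::uint8_t>;  // 8-bit grayscale, flat buffer

// Temporal information per P.910: standard deviation over all pixels of the
// difference between the current and the previous frame.
double temporal_information(const Frame& prev, const Frame& cur) {
    assert(prev.size() == cur.size());
    if (cur.empty()) return 0.0;
    double sum = 0.0, sum_sq = 0.0;
    for (std::size_t i = 0; i < cur.size(); ++i) {
        const double d =
            static_cast<double>(cur[i]) - static_cast<double>(prev[i]);
        sum += d;
        sum_sq += d * d;
    }
    const double n = static_cast<double>(cur.size());
    const double mean = sum / n;
    return std::sqrt(std::max(0.0, sum_sq / n - mean * mean));
}

// Sum of absolute differences, normalized to 0-1 (assumed normalization).
double sad_normalized(const Frame& prev, const Frame& cur) {
    assert(prev.size() == cur.size());
    if (cur.empty()) return 0.0;
    double sad = 0.0;
    for (std::size_t i = 0; i < cur.size(); ++i) {
        sad += std::abs(static_cast<double>(cur[i]) -
                        static_cast<double>(prev[i]));
    }
    return sad / (255.0 * static_cast<double>(cur.size()));
}

// A frame counts as frozen when it is (nearly) identical to the previous one.
bool is_frozen(const Frame& prev, const Frame& cur, double threshold = 0.001) {
    return sad_normalized(prev, cur) <= threshold;
}
```

One property worth knowing: because temporal information is a standard deviation, a uniform brightness change across the whole frame yields a value of 0, while the SAD still registers the change.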

As you can see, we cover most of the basics of what an engineer would like to see in their toolkit. This collection of metrics has already allowed us to quickly verify video quality issues across a set of diverse clips.
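Since all of these metrics are emitted per frame as JSON, a library consumer might assemble a record along the following lines. This is a purely hypothetical sketch: the `FrameMetrics` struct, the `to_json` function, the `frame` key, and the selection of fields are invented for illustration, and the actual output schema of VideoAnalyzer may look different.

```cpp
#include <cassert>
#include <cstddef>
#include <locale>
#include <sstream>
#include <string>

// Hypothetical per-frame record; field names mirror metrics from the list
// above, but the layout is an assumption and not the real output format.
struct FrameMetrics {
    std::size_t frame_index;
    double brightness;
    double blurriness;
    bool black_screen;
    bool freezing;
};

// Serializes one record as a compact JSON object.
std::string to_json(const FrameMetrics& m) {
    assert(m.brightness >= 0.0 && m.brightness <= 1.0);
    assert(m.blurriness >= 0.0 && m.blurriness <= 1.0);
    std::ostringstream out;
    out.imbue(std::locale::classic());  // always use '.' as decimal separator
    out << std::boolalpha               // print booleans as true/false
        << "{\"frame\":" << m.frame_index
        << ",\"brightness\":" << m.brightness
        << ",\"blurriness\":" << m.blurriness
        << ",\"black_screen\":" << m.black_screen
        << ",\"freezing\":" << m.freezing
        << "}";
    return out.str();
}
```

Emitting one such object per frame keeps the output easy to parse in scripts and automated test pipelines.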

Android Library

One of the main benefits of the library-based approach is that we can easily port it to Android. Developers can already use the AVEQ VideoAnalyzer library in conjunction with OpenCV to build performant and flexible implementations consuming and analyzing video. Right now, we are partnering with a test automation platform to provide them – and their customers – with our enhanced video QoE analysis.

The primary use case here would be to ingest live feeds from the camera to monitor the quality of devices such as smartphones or connected TVs, in particular when direct access through HDMI is not available.

Future Plans

VideoAnalyzer is already a powerful asset for us, but we see a clear path forward for its future development. There are two activities we are planning for now: new metrics and an overall No-Reference QoE model.

First of all, several important metrics will be added to the feature set:

- Banding detection: Compression of colors in terms of gamut can lead to visible banding artifacts. Here, metrics like CAMBI are valuable tools.

- Panning speed and camera jiggle analysis: Viewers tend to dislike global camera jiggle or excessive pan speeds (i.e., global, uniform motion). While not encoding-related, such metrics may help explain low overall QoE ratings from viewers.

- More sophisticated color-related metrics: Detecting peculiar colors or pallidness is another aesthetics-related feature that we want to integrate.

- Alternative overall quality estimators: NIQE and BRISQUE are well-known metrics that are not extremely accurate for the NR video use case, but still nice to have as additional indicators.

Then, the main question: with all these metrics, what’s the actual quality? This is the biggest feature that’s still on our roadmap: the quest for an overall QoE metric, that is, a single score that gives you the quality of the video sequence. We are partnering with research teams from TU Ilmenau and RWTH Aachen to deliver a comprehensive QoE metric based on the core features that our video analyzer implements, combined with deep learning-based models that incorporate content-related aspects. Stay tuned for more!

Ready to start measuring video quality from the source? Need to integrate advanced measurement capabilities into your products? Get in touch with us!